The case for rate-based queuing & the problems with one-in-one-out

In bringing queues to the online world, we had to reconsider the age-old logic behind them. Find out how our rate-based approach to queuing helps organizations improve their wait experience and sell product faster, and why one-in-one-out queuing is based on a misunderstanding of site capacity.

When we speak with prospective customers, they often ask, “Does Queue-it work by letting a new visitor onto our site each time an existing visitor leaves it?”

What they’re asking about is one-in-one-out queuing.

One-in-one-out queues (or session-based queues) operate by giving a new visitor access each time an existing visitor leaves. In theory, this method should allow you to keep the total number of visitors on your site constant.

We understand why people assume Queue-it operates through one-in-one-out. It’s intuitive. It sounds simple. It’s how physical queues typically work at restaurants, bars, and theme parks.

But the comparison only goes so far. The online world and the physical world have several key differences that create the need for an alternative approach to queuing.

That’s why Queue-it—along with most other virtual waiting room providers—is built for and around rate-based queuing.

Rate-based queues (or flow-based queues) operate by giving a consistent number of new visitors access within a set time interval. This means the number of visitors arriving on the site per interval stays the same, regardless of how many visitors are already on it.
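As a rough illustration of that idea, the sketch below releases a fixed number of visitors from a first-in-first-out line each interval, without ever asking how many visitors are on the site. The function name `release_next_batch` and the numbers are hypothetical, chosen only for illustration; this is not Queue-it's implementation.

```python
from collections import deque


def release_next_batch(waiting, visitors_per_interval):
    """Release up to `visitors_per_interval` visitors from the front of the line.

    The number of visitors currently on the site is deliberately not an input.
    """
    batch = []
    while waiting and len(batch) < visitors_per_interval:
        batch.append(waiting.popleft())
    return batch


# Hypothetical example: 7 visitors waiting, 3 released per interval.
line = deque(f"visitor-{n}" for n in range(1, 8))
first = release_next_batch(line, 3)   # visitor-1 .. visitor-3
second = release_next_batch(line, 3)  # visitor-4 .. visitor-6
third = release_next_batch(line, 3)   # only visitor-7 is left
```

A one-in-one-out queue would need an extra input here: a live count of visitors leaving the site.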

In this blog, you’ll discover why one-in-one-out is based on a misunderstanding of site capacity, the shortcomings of the approach, and how rate-based queuing allows you to sell product faster while delivering a better wait experience.

In a nutshell, the one-in-one-out approach has technical shortcomings that impact the efficiency and success of your events:

- By counting inactive and active users in the same way, you accumulate inactive users on your site, resulting in longer wait times for customers and slower sell-through of product.

- By sending visitors to your site in large and inconsistent groups, you lose the ability to provide accurate wait times, make progress through the queue erratic, and place unnecessary strain on key bottlenecks.

- By relying on two-way communication between the queuing system and your systems, you create an additional dependency/point of failure and need to use extra compute resources to maintain up-to-date info.

When people think of site capacity, they often imagine a room. This room, they think, can fit a fixed number of people in it before it becomes full (or crashes).

When interpreted this way, one-in-one-out makes perfect sense. One visitor leaves, a new one enters, and you consistently keep the room at its maximum capacity.

But this interpretation rests on a few false assumptions about how the internet works—that “total concurrent users” is a reliable metric, that every visitor places the same load on your system, and that websites have an “exit door” you can monitor.

So instead of a room, we like to think of website capacity like a supermarket.

When most people imagine a supermarket queue, they picture the checkout line. Why?

Because you don’t queue to enter the supermarket. You queue to check out.

The total number of people in the supermarket isn’t what defines the length of supermarket queues.

What does define the line length is:

- How many people want to check out per minute

- How many purchases the shop can handle per minute

These two numbers determine how many customers you’ll need to queue and how fast those queues will be—not the size of your supermarket or the number of visitors in it.

There are three key reasons why the number of people in the store doesn’t determine the queue:

- Not every supermarket visitor makes a transaction or has the same demands. The father and his three children don’t make the same number of transactions as the four businesspeople who’ve just come to get milk, so you can’t count them in the same way. Doing so leads to a scenario where you accumulate families while making the businesspeople wait longer to get in (because the businesspeople come and go quickly, while the families linger in the aisles).

- Supermarkets aren’t worried about the 1,000 people they can technically cram into their store; they’re worried about the 100 people who want to make their purchase at once. The bottleneck in a supermarket—the place where flow becomes restricted—is at the checkout.

- While it’s easy and reliable to track transactions digitally, it’s tough to track the total number of people in a store. You’d need a staff member at the entrance and exit counting foot traffic.

These same three reasons apply to websites. Your website isn’t a room you can simply fill to capacity, then swap visitors in and out of, because:

- One site visitor is not the same as one transaction. Many visitors who enter your site never make a purchase. These users who don’t transact remain "on" your site for longer than those who do, meaning they accumulate in number throughout your sale. By reserving space for these non-converting or inactive users, you increase wait times and slow down your sales.

- Transactions—not total visitors—are where capacity is typically limited, and transactions occur as a flow. You need to think of your capacity in terms of how many visitors your bottlenecks can handle per minute, not how many visitors your site can hold at a fixed point. One-in-one-out ignores the importance of consistent throughput, meaning you cannot give customers a wait time estimate, deliver smooth progress, or ensure a consistent flow of activity to your bottlenecks.

- There is no “website exit” you can monitor to determine how many people are leaving. You can set up various rules to kick visitors off your site when they confirm their order or log out, but these just create a dependency between systems, increase the complexity and resources required for set-up, and invite user error. Plus, even with these rules, you’ll never detect every inactive user. Users can lose their internet connection, lock their phones, or even close their tab, and they’ll still appear as active sessions until they’re timed out. That’s why a security-conscious application such as online banking will send a notification after ten minutes of inactivity saying, “Hi, are you still here?” Unless a user makes a request (e.g. clicks a button), you cannot know what they’re doing on the other end.
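To make that last point concrete, here is a minimal sketch of how a server can decide who is still "on" the site: all it can do is compare each session's last request time against a timeout. The function name, session labels, and timestamps (minutes since the sale opened) are hypothetical.

```python
def sessions_counted_as_present(last_request_minute, now_minute, timeout_minutes=20):
    """Return the session ids the server still counts as being on the site.

    The server only sees when each session last made a request, so a closed
    tab looks the same as a shopper reading a product page until the timeout.
    """
    return sorted(
        session_id
        for session_id, last_seen in last_request_minute.items()
        if now_minute - last_seen < timeout_minutes
    )


# Hypothetical sessions, keyed by id, with the minute of their last request.
last_seen = {
    "shopper-browsing": 14,   # still clicking around
    "closed-tab": 2,          # left at minute 2, but the server cannot tell
    "idle-since-start": 0,    # no requests since the sale opened
}

at_minute_15 = sessions_counted_as_present(last_seen, now_minute=15)
at_minute_21 = sessions_counted_as_present(last_seen, now_minute=21)
```

At minute 15 all three sessions still hold a slot, and the closed tab keeps holding one until minute 22.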

It's a misunderstanding of website capacity that makes one-in-one-out so alluring. It’s the assumption that the real-world scenarios we experience in our everyday lives translate perfectly to the online world.

But these assumptions and misunderstandings can have real business consequences—including slower sales, a worse customer experience, and more work for your teams and your systems.

By now, the shortcomings of one-in-one-out should be clear. But for those of you who aren’t convinced (or just want to dig deeper into the numbers), let’s walk through a concrete example.

To see how the problems of one-in-one-out play out in practice, let’s look at a typical sales scenario:

- Your capacity is 1,000 total visitors

- Your average user journey is five minutes

- Sessions are set to timeout after 20 minutes of inactivity

- And we’ll assume 20% of visitors become inactive or do not make a purchase, which is a generous 80% conversion rate

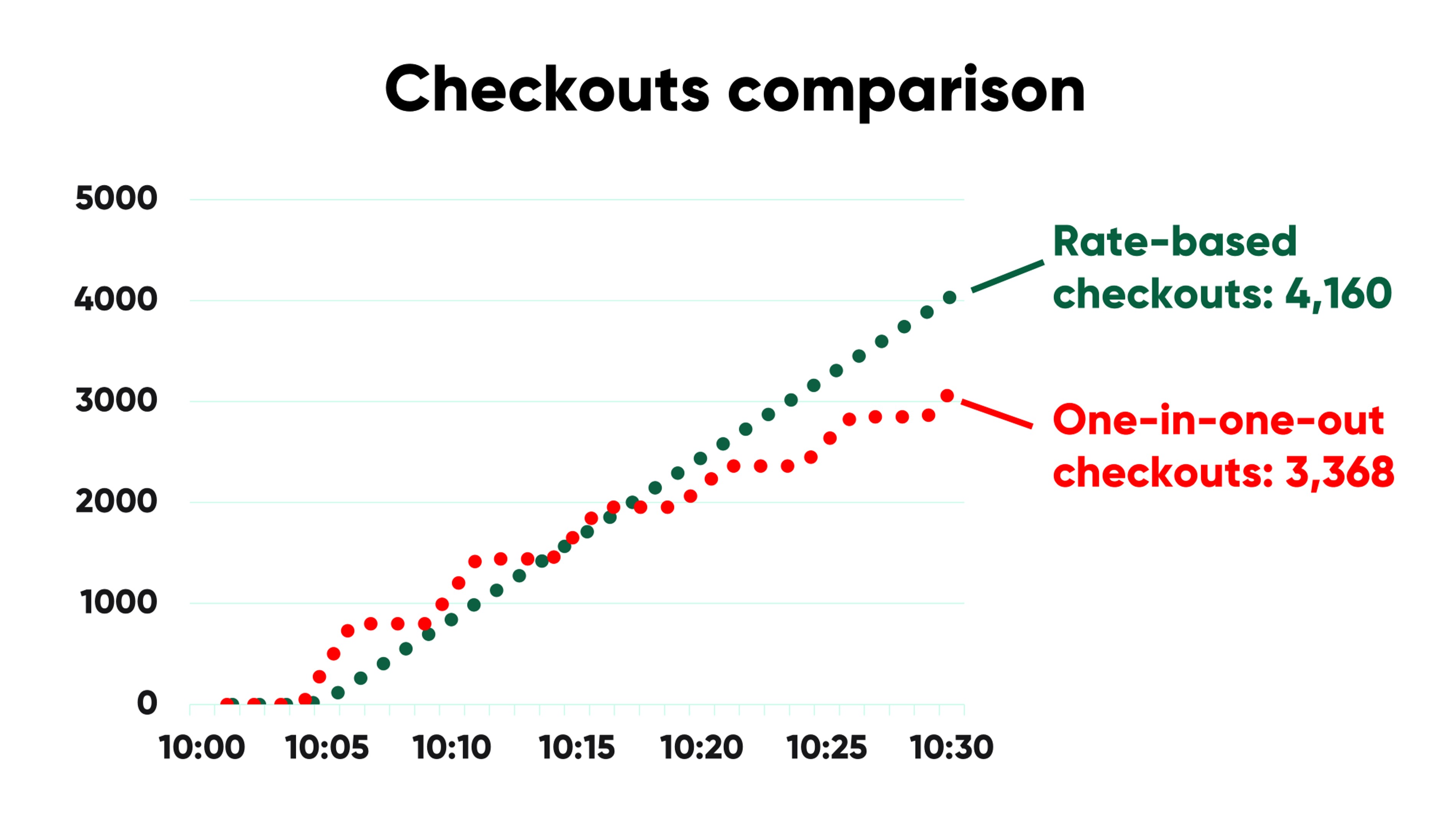

30 minutes into the sale, these are your key metrics with one-in-one-out vs. with rate-based queuing:

| | One-in-one-out | Rate-based | Difference |
|---|---|---|---|
| Total visitors given access | 4,856 | 6,200 | 28% more with rate-based |
| Total checkouts | 3,368 | 4,160 | 24% more with rate-based |
| Average number of active users across period | 560 | 883 | 58% higher with rate-based |
| Accurate wait time estimate | No | Yes | Better user experience with rate-based |

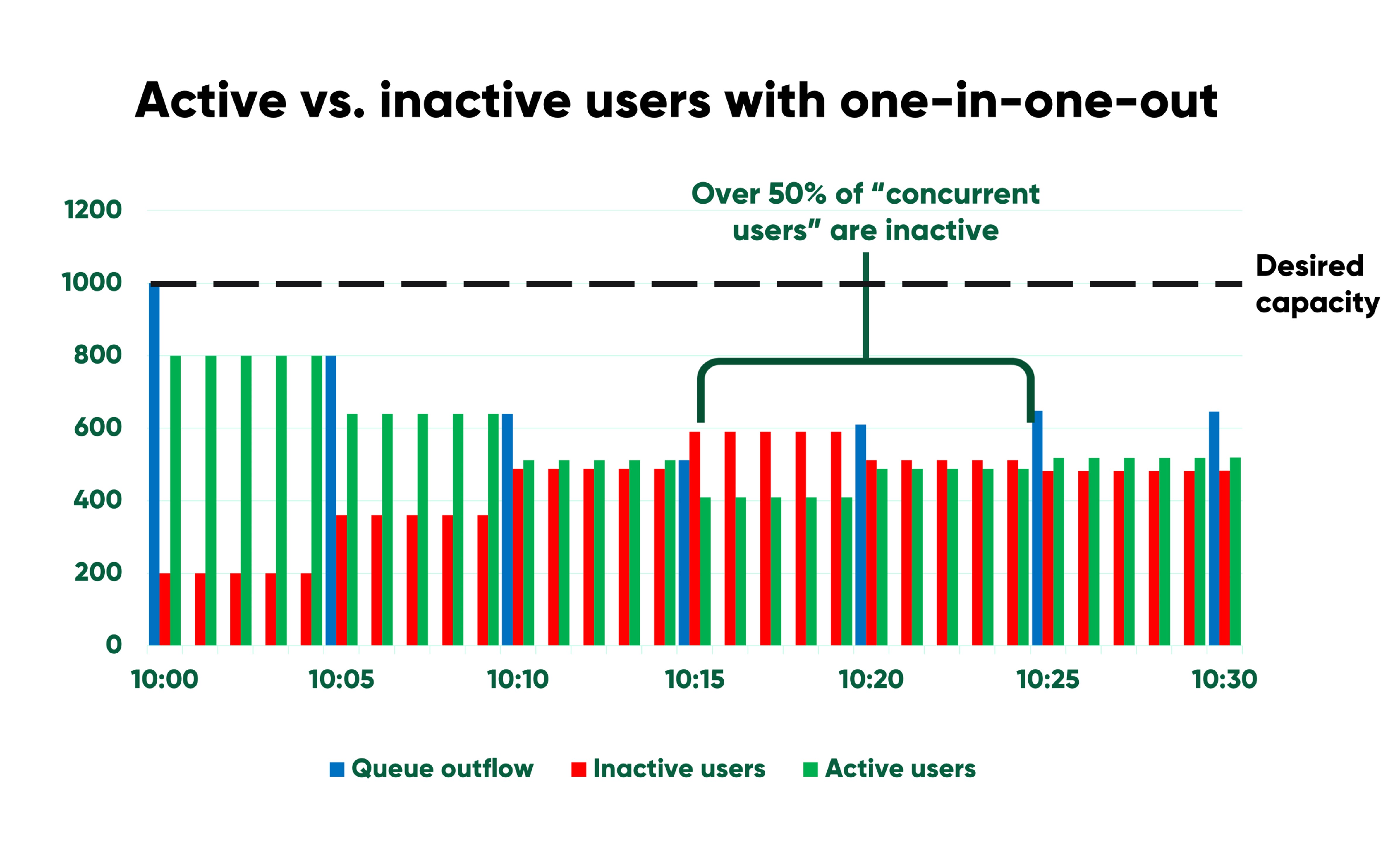

When your one-in-one-out sale goes live at 10:00, you let in 1,000 visitors—800 are active and 200 are inactive.

At 10:05, the first 800 check out, so you let in a new 800. Again, this group consists of 640 active sessions (80% of 800) and 160 inactive sessions (20% of 800).

At 10:10, your 640 active visitors check out, and it’s time for a new batch. This time, you get 512 active visitors, and 128 inactive visitors.

Now let’s have a look at your site, fifteen minutes into your sale, at its “maximum capacity” of 1,000. There are just 410 visitors (80% of 512) interacting with your site and moving through the user journey. The other 590 “spots” on your site are held by visitors who aren’t actually there.

This means, for a full ten minutes, from 10:15-10:25, your website is processing over 50% fewer visitors than it could be. That means lower efficiency, longer wait times, and slower sales.

Active users are coming and going quickly, while inactive users are accumulating in number until you time them out (that’s why the number of inactive users drops at 10:20, when the first 200 inactive sessions reach the 20-minute timeout).

You could shorten the timeout window, but you’d open yourself up to complaints from customers who get booted off the site because they take time to reset their password, find their wallet, or because they temporarily lost internet access.

A few other shortcomings to note in this scenario are that:

- You cannot provide accurate estimated wait times or consistent progress to customers in queue—both of which are fundamental tenets of queue psychology.

- Your bottleneck (the checkout) is potentially processing up to 800 visitors one minute, then 0 visitors the next, rather than processing a constant flow.

- Your outflow rate is contingent on constant communication between your systems and your queuing solution.

Results with one-in-one-out after 30 minutes

- 4,856 visitors given access

- 3,368 checkouts

- 560 average active users (56% of what you can handle)

- Inaccurate or non-existent wait time estimate for customers and inconsistent progress
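The figures above can be reproduced with a small simulation. This is a simplified sketch of the scenario as described, not a model of any real queuing product: it steps in five-minute batches, assumes every active visitor checks out exactly one journey after being admitted, rounds the inactive share of each batch to a whole visitor, and assumes the sale length and timeout are multiples of the journey length. The function and variable names are hypothetical.

```python
def simulate_one_in_one_out(capacity=1000, journey_min=5, timeout_min=20,
                            inactive_pct=20, duration_min=30):
    """Step through the sale in journey-length batches, as in the walkthrough."""
    admitted_total = 0
    checkouts_total = 0
    active = 0            # visitors currently moving through the journey
    idle_batches = []     # (minute admitted, count) of idle sessions holding a slot
    active_per_period = []

    for minute in range(0, duration_min + 1, journey_min):
        # Everyone who was active during the last period checks out and leaves.
        checkouts_total += active
        freed_slots = active

        # Idle sessions free their slot only once they reach the timeout.
        still_idle = []
        for admitted_at, count in idle_batches:
            if minute - admitted_at >= timeout_min:
                freed_slots += count
            else:
                still_idle.append((admitted_at, count))
        idle_batches = still_idle

        # One-in-one-out: admit exactly as many visitors as slots were freed.
        batch = capacity if minute == 0 else freed_slots
        admitted_total += batch
        new_idle = round(batch * inactive_pct / 100)
        active = batch - new_idle
        idle_batches.append((minute, new_idle))

        # Record the active count for each full five-minute period of the sale.
        if minute < duration_min:
            active_per_period.append(active)

    return {
        "admitted": admitted_total,
        "checkouts": checkouts_total,
        "average_active": sum(active_per_period) / len(active_per_period),
    }


result = simulate_one_in_one_out()
```

Under these assumptions the sketch gives 4,856 visitors admitted, 3,368 checkouts, and an average of roughly 561 active users, close to the 560 quoted above.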

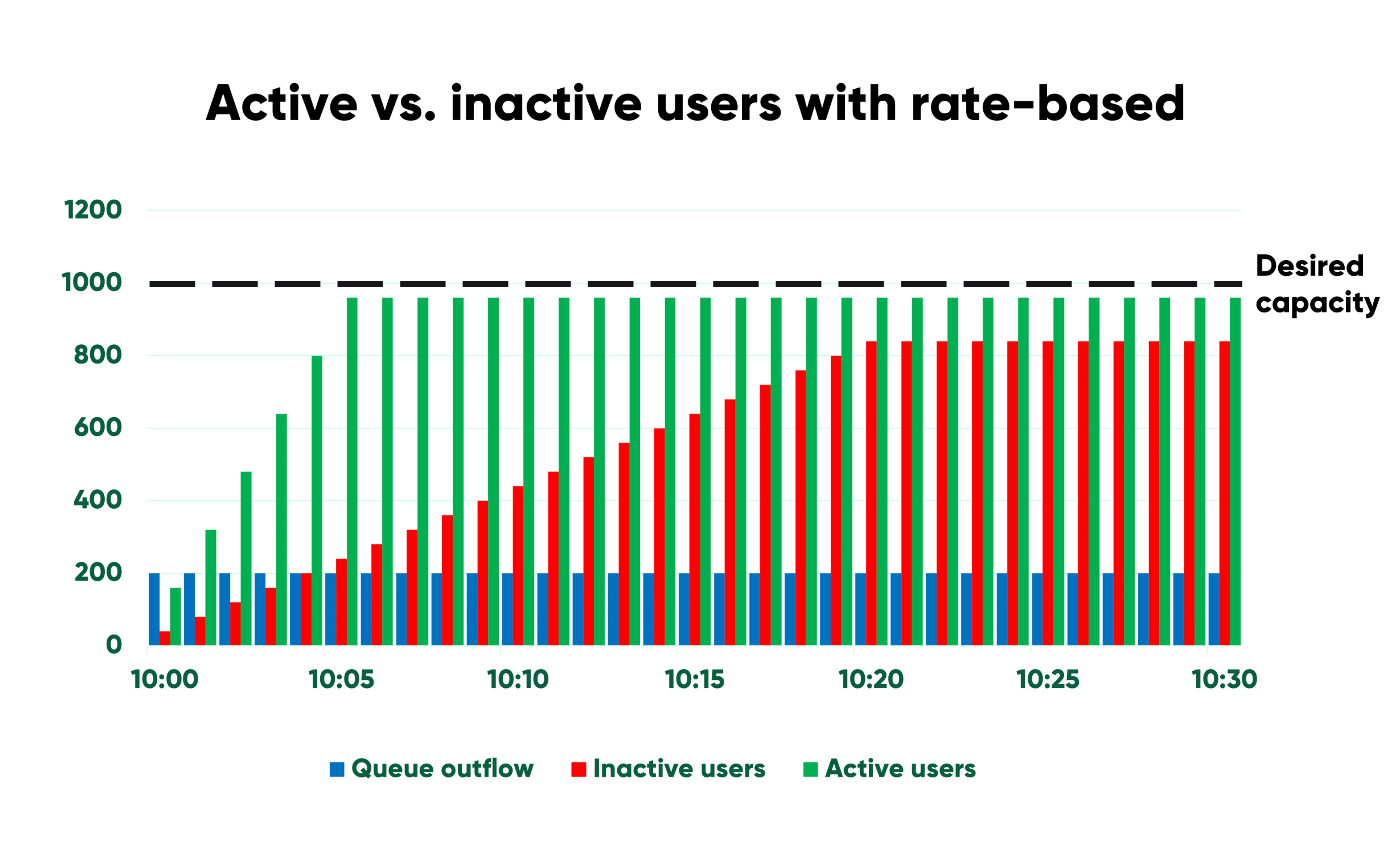

If you were to come to Queue-it with the above scenario, we’d apply Little’s Law (L = Wλ) from queuing theory to determine your outflow, where:

- L = maximum number of concurrent sessions (1,000)

- W = average session length (5 minutes)

- λ = arrival rate (L/W, or 1000/5 = 200 visitors per minute)

By applying this formula, we can see that giving access to 200 visitors per minute for the duration of your event will allow you to quickly reach and maintain your max capacity.
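The calculation itself is a one-liner. A minimal sketch, with a hypothetical function name, using the scenario's numbers:

```python
def admission_rate_per_minute(max_concurrent_sessions, avg_session_minutes):
    """Little's Law rearranged for the arrival rate: lambda = L / W."""
    if avg_session_minutes <= 0:
        raise ValueError("average session length must be positive")
    return max_concurrent_sessions / avg_session_minutes


# L = 1,000 concurrent sessions, W = 5 minutes per session.
rate = admission_rate_per_minute(max_concurrent_sessions=1000, avg_session_minutes=5)
```

Here `rate` comes out at 200 visitors per minute, matching the figure above.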

This consistent arrival rate has obvious benefits for the user experience, because it allows you to provide accurate wait time estimates and ensure each user experiences continual progress.
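With a fixed admission rate, a wait estimate reduces to simple division. The sketch below is an illustrative assumption about how such an estimate could be computed, not a description of how Queue-it calculates it; the function name is hypothetical.

```python
import math


def estimated_wait_minutes(position_in_queue, visitors_per_minute):
    """Minutes until the visitor at `position_in_queue` (1 = front) is admitted."""
    if position_in_queue < 1 or visitors_per_minute <= 0:
        raise ValueError("position must be >= 1 and rate must be positive")
    return math.ceil(position_in_queue / visitors_per_minute)


front = estimated_wait_minutes(1, 200)            # admitted within the first minute
middle = estimated_wait_minutes(1000, 200)        # 1000 / 200 = 5 minutes
further_back = estimated_wait_minutes(1001, 200)  # rolls over into minute 6
```

With one-in-one-out there is no fixed denominator to divide by, because the admission rate depends on when visitors happen to leave.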

But a consistent arrival rate also has benefits for your sales and your systems. Even when accounting for the 20% of visitors who become inactive, rate-based queuing allows you to hold more active visitors (960) while keeping the load on your bottlenecks lower and more consistent (160 checkouts per minute). And it does so without requiring any communication between systems!

When comparing checkouts, the rate-based approach initially looks slower. At 10:05, you have just 160 checkouts. But like the tortoise and the hare, the trick of rate-based queuing is its consistency, because every minute after 10:05 brings with it another 160 checkouts—meaning by the end of the 30 minutes, your sales are 24% higher.

Results with rate-based queuing after 30 minutes

- 6,200 visitors given access (28% more than with one-in-one-out)

- 4,160 checkouts (24% more than with one-in-one-out)

- 883 average active users (58% higher than with one-in-one-out)

- Every visitor gets an accurate wait time estimate and consistent progress
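These results can also be reproduced with a short simulation. As before, this is a simplified sketch with hypothetical names. It admits 200 visitors every minute from 10:00 through 10:30 and assumes each active visitor checks out exactly five minutes after being admitted. It also assumes a cohort counts as "on site" from the minute it is admitted through the minute it checks out, inclusive, which is the counting convention that appears to produce the 960 and 883 figures.

```python
def simulate_rate_based(rate_per_min=200, journey_min=5, inactive_pct=20,
                        duration_min=30):
    """Admit a fixed number of visitors every minute and track the outcome."""
    active_per_cohort = rate_per_min * (100 - inactive_pct) // 100
    admitted_total = 0
    checkouts_total = 0
    active_samples = []

    for minute in range(duration_min + 1):
        # The same number of visitors is admitted every minute, no matter what.
        admitted_total += rate_per_min

        # The cohort admitted `journey_min` minutes ago checks out now.
        if minute >= journey_min:
            checkouts_total += active_per_cohort

        # Assumption: a cohort counts as on site from the minute it is admitted
        # through the minute it checks out, inclusive.
        cohorts_on_site = min(minute, journey_min) + 1
        active_samples.append(cohorts_on_site * active_per_cohort)

    return {
        "admitted": admitted_total,
        "checkouts": checkouts_total,
        "peak_active": max(active_samples),
        "average_active": sum(active_samples) / len(active_samples),
    }


result = simulate_rate_based()
```

Under these assumptions the sketch gives 6,200 visitors admitted, 4,160 checkouts, a plateau of 960 active visitors, and an average of about 883.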

With the rate-based approach, you process more visitors, sell more products, and provide a smoother user experience—all with a simpler system and set-up.

When our founders were developing the first virtual waiting room, they considered the one-in-one-out approach. To them, as to many people, it sounded reasonable. But while mapping out the solution, they quickly saw the shortcomings we list above.

That’s why Queue-it is designed around the same rate-based queuing theory approach that’s used in manufacturing, transportation, and project management.

Queue-it’s rate-based approach is not what most people think of when they think of queuing. But that’s because when most people think of queuing, they think of the in-person queues they’ve been in. When they think of capacity, they think of it as a room or a glass of water, with a fixed capacity limit defined by equal, static units.

Rate-based queuing recognizes the idiosyncrasies of the online world and accounts for them. When compared with one-in-one-out, rate-based queuing:

- Processes more visitors and drives more sales in a similar amount of time

- Ensures progress is continual and wait times are accurate

- Is simple, requiring no two-way communication or additional dependencies

- Accounts for inactive users by baking them into the formula

- Creates a flow of traffic that more closely matches typical load tests